Idea

Efficient WAN federation of resources would provide several important benefits:

- Improve robustness - In case data are missing or corrupted at one site job would not fail but just access it directly from other site.

- Increase resources - If remote access would be almost as efficient as the local one, duplication of the data at different site would be avoided thus leaving more free space.

- Improved hardware utilization. Spare CPU capacity of one site could take over jobs whose input data are at other site

- Open the way for the big scale analysis services (SSS->SkimSlimService)

Where are several reasons for this test framework:

- WAN IO performance is changing all the time as function of packet routing, bandwidth, WN and SE load.

- We want to easily test impact of any changes we will make on: ROOT file organization/setting, ROOT/Athena version used, way we use the files.

- Need a way to compare different hardware, hardware and software site configurations. Optimize sites.

Access is open not only to ATLAS community but also ROOT collaborations. We are also open for communication with sites.

A short description of how stuff works underneath can be found

here.

HC side of things

There is a functional HammerCloud test set up which submits a test job to the most of large sites.

HummerCloud tries to always keep one test in the queue at ANALY queue of the sites. This usually means that sites run roughly 100 jobs per day. Tests are running continuously.

HC just submits the job using Ganga and there is no special settings, so we are seeing the same performance as any normal grid analysis job.

Whatever a site does for normal analysis job it does the same thing for the test jobs.

Tests

Each job submitted looks up from the database a list of "server" sites - sites that are available to provide the data. Each of server sites is tested in different scenarios. Currently these are:

- Ping - to establish if site is up at all and know its "distance"

- direct copy test file is copied using relevant mechanism (now xrdcp)

- 100% default cache simple ROOT script reads sequentially 100% of events with TTreeCache set to default value of 30MB.

Results

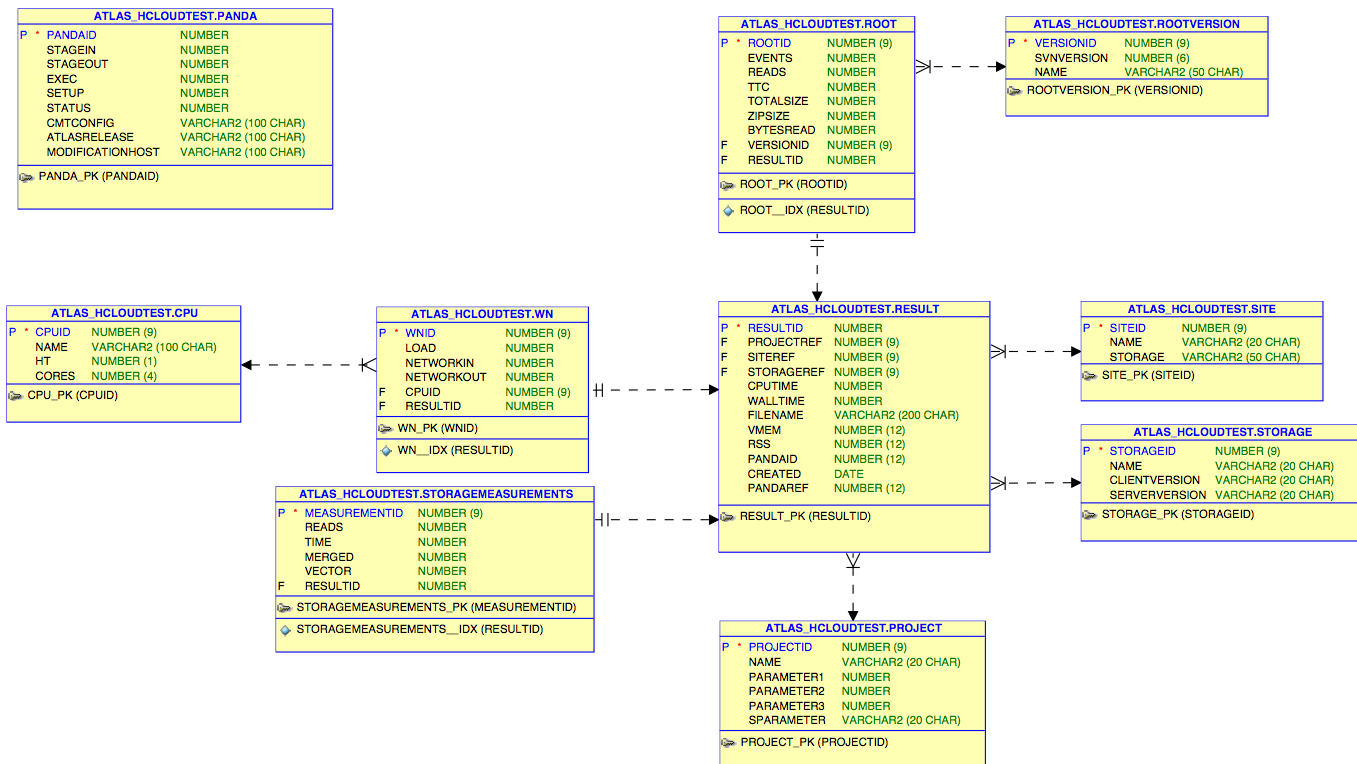

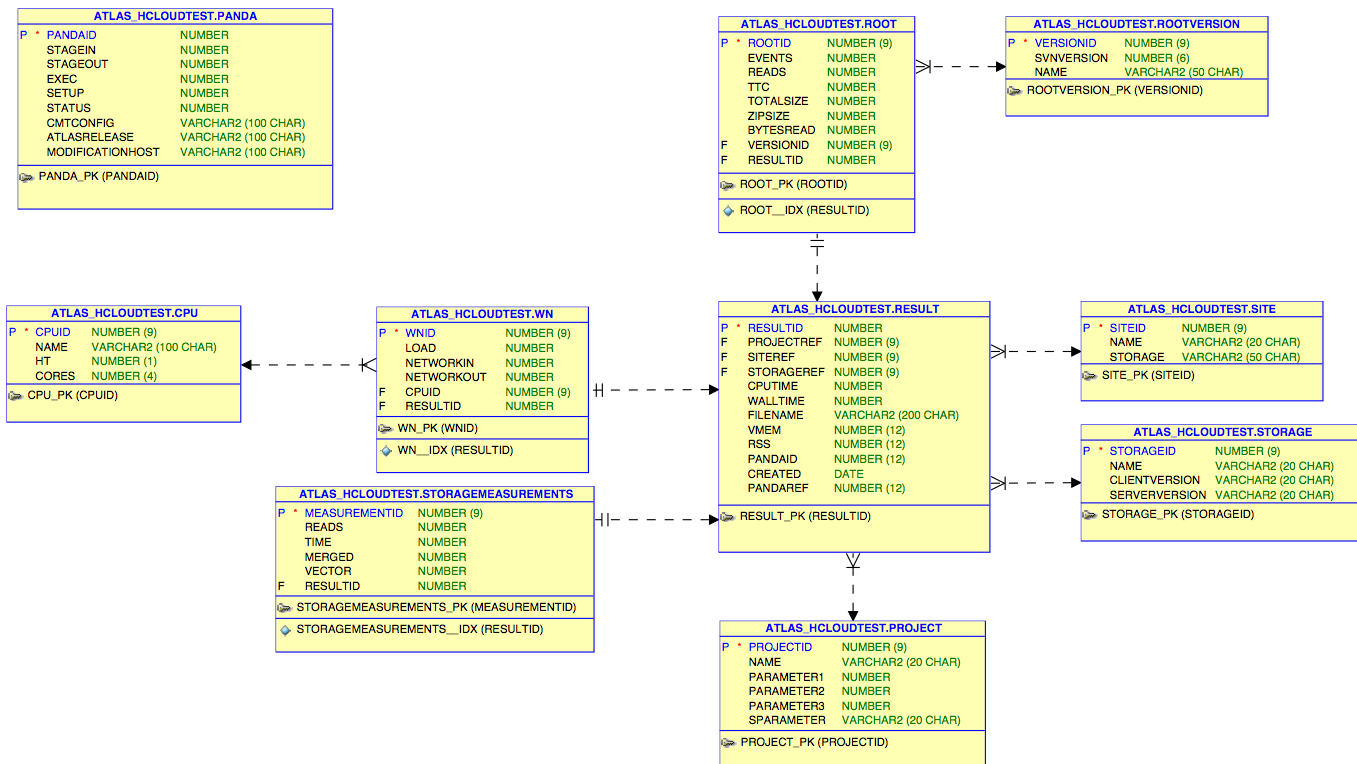

Results are automatically uploaded to ORACLE database in CERN. The DB schema:

While the most of variables stored are self-explanatory I will try to find time to describe exactly how each of them is obtained.

For now it is important to explain content of the db table named "panda". HC test jobs collect pandaID. This ID is used by the cron job which runs every one hour to look up the pilot collected information from the panda monitor. From all of the panda monitor information we store: timing information (stage-in, stage-out, setup and exec times), job status, machine name, cmtconfig and atlas release. It is important to notice that the timing information is for all of the test.

Web site

Even still in development, this web site can be used to look up the most important information. If you need an additional information that can be looked up from the db and is not available through the site let me know and I can give it to you directly. Please feel free to send me any comments, suggestions, feature requests.

While the most of variables stored are self-explanatory I will try to find time to describe exactly how each of them is obtained.

For now it is important to explain content of the db table named "panda". HC test jobs collect pandaID. This ID is used by the cron job which runs every one hour to look up the pilot collected information from the panda monitor. From all of the panda monitor information we store: timing information (stage-in, stage-out, setup and exec times), job status, machine name, cmtconfig and atlas release. It is important to notice that the timing information is for all of the test.

While the most of variables stored are self-explanatory I will try to find time to describe exactly how each of them is obtained.

For now it is important to explain content of the db table named "panda". HC test jobs collect pandaID. This ID is used by the cron job which runs every one hour to look up the pilot collected information from the panda monitor. From all of the panda monitor information we store: timing information (stage-in, stage-out, setup and exec times), job status, machine name, cmtconfig and atlas release. It is important to notice that the timing information is for all of the test.